Challenge

Mobile sensors, including wearable cameras, dashboard cameras, and smart glasses, are poised to change how city workers and services operate and how citizens engage with their urban environments. City workers can use wearable cameras and smart glasses to document their work in real time, providing insight into municipal operations such as infrastructure maintenance, public safety patrols, and utility repairs. Similarly, dashboard cameras installed in city vehicles can capture data in transit, such as traffic conditions, road quality, and environmental factors.

R&D Objectives

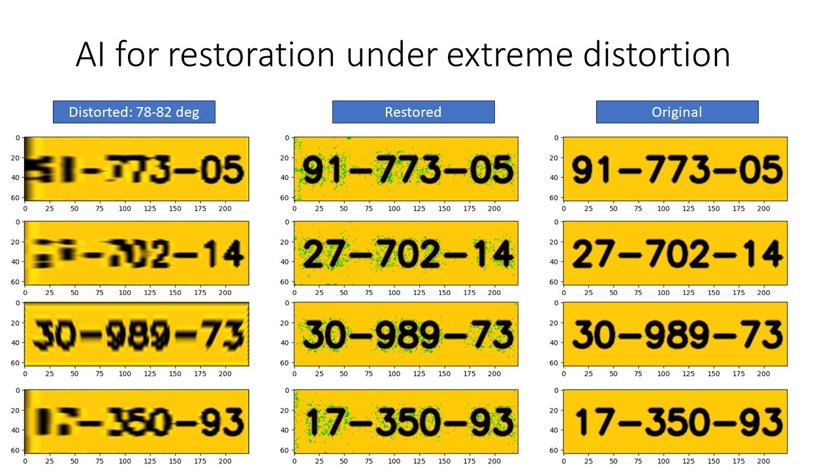

Advanced AI is essential for deriving insights from mobile cameras because casually captured video often suffers from extreme distortions such as varying view angles, motion blur, and panoramic lens distortion. Traditional computer vision algorithms struggle to interpret such distorted visual data, which leads to errors in the derived insights. Advanced AI techniques, particularly those based on deep learning and convolutional neural networks, are better suited to handling these complex and diverse visual inputs.

AI for mobile sensors
